Google has a new search algorithm, the system it uses to sort through all the information it has when you search and come back with answers. It’s called “Hummingbird,” and below is what we know about it so far.

What’s a “search algorithm”?

That’s a technical term for what you can think of as a recipe that Google uses to sort through the billions of web pages and other information it has, in order to return what it believes are the best answers.

What’s “Hummingbird”?

It’s the name of the new search algorithm that Google is using, one that Google says should return better results.

So that “PageRank” algorithm is dead?

No. PageRank is one of over 200 major “ingredients” that go into the Hummingbird recipe. Hummingbird looks at PageRank — how important links to a page are deemed to be — along with other factors like whether Google believes a page is of good quality, the words used on it and many other things (see our Periodic Table Of SEO Success Factors for a better sense of some of these).

Why is it called Hummingbird?

Google told us the name comes from being “precise and fast.”

When did Hummingbird start? Today?

Google started using Hummingbird about a month ago, it said. Google only announced the change today.

What does it mean that Hummingbird is now being used?

Think of a car built in the 1950s. It might have a great engine, but that engine might lack things like fuel injection or be unable to use unleaded fuel. When Google switched to Hummingbird, it’s as if it dropped the old engine out of a car and put in a new one. It also did this so quickly that no one really noticed the switch.

When’s the last time Google replaced its algorithm this way?

Google struggled to recall when any type of major change like this last happened. In 2010, the “Caffeine Update” was a huge change. But that change was mostly meant to help Google better gather information (indexing) rather than sort through it. Google search chief Amit Singhal told me that 2001, when he first joined the company, was perhaps the last time the algorithm was so dramatically rewritten.

What about all these Penguin, Panda and other “updates” — haven’t those been changes to the algorithm?

Panda, Penguin and other updates were changes to parts of the old algorithm, not a replacement of the whole. Think of it again like an engine. Those updates were as if the engine received a new oil filter or had an improved pump put in. Hummingbird is a brand-new engine, though it continues to use some of the old parts, like Penguin and Panda.

The new engine is using old parts?

Yes. And no. Some of the parts are perfectly good, so there was no reason to toss them out. Other parts are constantly being replaced. In general, Google says, Hummingbird is a new engine built from both existing and new parts, organized to serve the search demands of today rather than the needs of ten years ago and the technologies available back then.

What type of “new” search activity does Hummingbird help?

“Conversational search” is one of the biggest examples Google gave. When people speak their searches, they may find it more useful to phrase them as they would in a conversation.

Consider a query like “What’s the closest place to buy the iPhone 5s to my home?” A traditional search engine might focus on finding matches for words, such as a page that says “buy” and “iPhone 5s.”

Hummingbird should better focus on the meaning behind the words. It may better understand the actual location of your home, if you’ve shared that with Google. It might understand that “place” means you want a brick-and-mortar store. It might get that “iPhone 5s” is a particular type of electronic device carried by certain stores. Knowing all these meanings may help Google go beyond just finding pages with matching words.

In particular, Google said that Hummingbird is paying more attention to each word in a query, ensuring that the whole query — the whole sentence or conversation or meaning — is taken into account, rather than particular words. The goal is that pages matching the meaning do better, rather than pages matching just a few words.

I thought Google did this conversational search stuff already!

It does (see Google’s Impressive “Conversational Search” Goes Live On Chrome), but until now it had really only done so within its Knowledge Graph answers. Hummingbird is designed to apply this meaning technology to billions of pages from across the web, in addition to Knowledge Graph facts, which may bring back better results.

Does it really work? Any before-and-afters?

We don’t know. There’s no way for us to do our own “before-and-after” comparison now. Pretty much, we only have Google’s word that Hummingbird is improving things. However, Google did offer some before-and-after examples of its own that it says show Hummingbird’s improvements.

A search for “acid reflux prescription” used to list a lot of drugs, Google said, which might not necessarily be the best way to treat the condition. Now, Google says, the results include information about treatment in general, including whether you even need drugs, with a page of that kind among the listings.

A search for “pay your bills through citizens bank and trust bank” used to bring up the homepage for Citizens Bank but now should return the specific page about paying bills.

A search for “pizza hut calories per slice” used to list an answer from another source, Google said, rather than one from Pizza Hut. Now, Google says, it lists an answer directly from Pizza Hut itself.

Could it be making Google worse?

Almost certainly not. While we can’t say that Google’s gotten better, we do know that Hummingbird — if it has indeed been used for the past month — hasn’t sparked any wave of consumers complaining that Google’s results suddenly got bad. People complain when things get worse; they generally don’t notice when things improve.

Does this mean SEO is dead?

No, SEO is not yet again dead. In fact, Google’s saying there’s nothing new or different SEOs or publishers need to worry about. Guidance remains the same, it says: have original, high-quality content. Signals that have been important in the past remain important; Hummingbird just allows Google to process them in new and hopefully better ways.

Does this mean I’m going to lose traffic from Google?

If you haven’t in the past month, well, you came through Hummingbird unscathed. After all, it went live about a month ago. If you were going to have problems with it, you would have known by now.

By and large, there’s been no major outcry among publishers that they’ve lost rankings. This seems to support Google’s statement that the effect is very much query by query, one that may improve particular searches (especially complex ones) rather than something that hits “head” terms and, in turn, causes major traffic shifts.

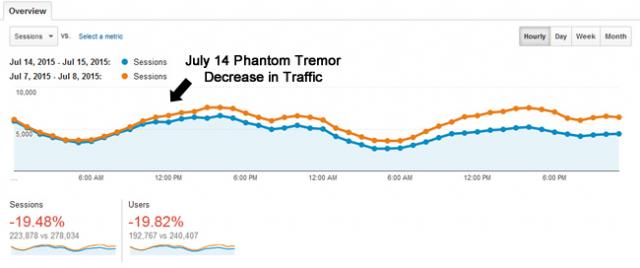

But I did lose traffic!

Perhaps it was due to Hummingbird, but Google stressed that it could also be due to some of the other parts of its algorithm, which are always being changed, tweaked or improved. There’s no way to know.

How do you know all this stuff?

Google shared some of it at its press event today, and then I talked with two of Google’s top search execs, Amit Singhal and Ben Gomes, after the event for more details. I also hope to do a more formal look at the changes from those conversations in the near future. But for now, hopefully you’ve found this quick FAQ based on those conversations to be helpful.

By the way, another term for the “meaning” connections that Hummingbird makes is “entity search,” and we have an entire panel on that at our SMX East search marketing show in New York City next week. The Coming “Entity Search” Revolution session is part of an entire “Semantic Search” track that also gets into ways search engines are discovering the meanings behind words. Learn more about the track and the entire show on the agenda page.