When it comes to search engine optimization, columnist Mark Munroe suggests you need to think beyond your website.

Mark Munroe on August 7, 2015 at 10:34 am

How does one win at SEO in 2015 and beyond?

Some of the directives we have been hearing for years are truer than ever:

“Just create great content!”

“Content is king!”

“Build a quality site!”

But what is “great”? How do you measure “quality”?

You can’t evaluate content quality without considering the expectations of the user. It doesn’t matter how well the content is written if it’s out of sync with the user’s expectations. Great content, in the context of search, means you have moved beyond SEO to Search Experience Optimization (SXO).

The Search Experience Starts On Google And Ends On Google

Typically, user experience (UX) optimization focuses on optimizing success metrics. Perhaps those metrics are based upon converting users into buyers, gathering email addresses, generating page views or getting users to click on ads.

These are your metrics, not Google’s — they are your customers, not Google’s. With SXO, you need to focus on Google’s customer. This experience starts and ends on Google.

We have access to all sorts of metrics about our own site, some of them very clear (like a purchase) and some vague (like bounce rate). However, we have little access to how Google may be measuring results on their own site. It’s a deep black hole. To optimize the search experience, we must shed some light on that darkness!

You Can’t Always Get What You Want

We want users who are ready to buy, book, subscribe or otherwise take an action that’s good for our business. The following chart shows a hypothetical breakdown of how search visitors might interact with a website:

In this case, 60% of the users never take a single action. Did they get what they want? Who are these people? Why are they landing on your site?

A mere 10% took an action that can reasonably be viewed as a successful visit (a sign-up or a purchase). What about everyone else? Even those who didn’t bounce? Did they walk away from the site frustrated or happy?

We don’t always get the visitors we want. Search Experience Optimization means optimizing the user experience for the users we get, as well as the ones we want! Not only will that align with what Google wants, but a better understanding of all our users will help our business objectives, as well.

What Google Wants: Provide An Answer!

Except for navigational searches, almost all searches are questions, even when they are not phrased as such. Very simply, Google wants to provide answers — as evidenced by the increasing number of direct answers appearing within search results.

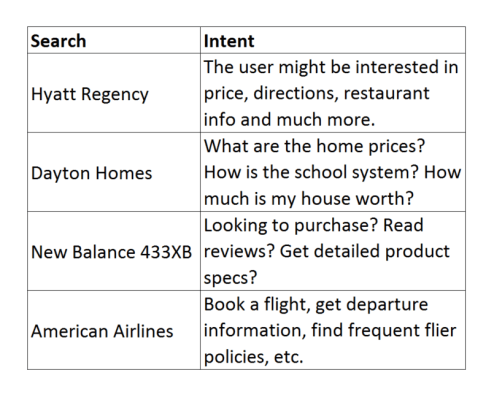

Consider the following searches:

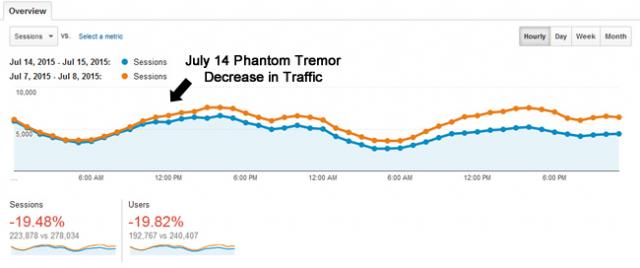

Google is successful when it provides an answer — but how does Google know if it has done so successfully, especially when the query is not obviously a question?

How Does Google Evaluate?

Obviously, Google has its own internal metrics to measure the quality of its search results. Just like our own sites, Google must have metrics based on what users click on — in fact, Google recently confirmed this.

It makes sense that Google analyzes click behavior. Likely and oft-discussed metrics it is looking at include:

- Short click. A “short click” is a quick return from a website to Google. Clearly, a very quick return is not a good signal.

- Long click. This refers to a long delay before the user returns to Google. Longer is better.

- Pogosticking. This is when a searcher bounces back and forth between several search results.

- Click-through rate. How often users click on a given result compared with how often it is displayed (expressed as a percentage).

- Next click. What a user clicks on after “pogosticking” back to Google (either they click on an existing search listing or perform a new search).

- Next search. When a user moves on to a new search.

- Click rate on second search. When a previous page is elevated due to a personalized search and/or a previous click.
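
Two of these definitions are simple enough to write down. The following is a minimal Python sketch, not a description of how Google computes anything: the function names and the 10-second cutoff between a short and a long click are assumptions chosen for illustration, since Google has not published its thresholds.

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """Return CTR as a percentage: clicks divided by impressions, times 100."""
    if impressions == 0:
        return 0.0
    return 100.0 * clicks / impressions


def classify_click(seconds_before_return: float, short_click_cutoff: float = 10.0) -> str:
    """Label a visit by how long the searcher stayed before returning to the results.

    The cutoff is a hypothetical value, not a known Google threshold.
    """
    if seconds_before_return < short_click_cutoff:
        return "short click"
    return "long click"


print(click_through_rate(30, 1000))  # 3.0
print(classify_click(4))             # short click
print(classify_click(95))            # long click
```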

The Next Click

The most telling signal to Google may very well be the “next click.” If Google wants to provide the answer to a query, the next user click tells them what they need to know. Did the user find someplace to buy their New Balance running shoes? Or a review for the B&B in Napa?

If a user returns and clicks a different search result from the same query — or, upon a subsequent visit to Google, repeats the same query — that could be a signal that the initial search was not satisfied. If a user comes back and does a completely new search, that could mean the user was satisfied with the result.

Google has yet to confirm that click behavior directly influences rankings. It’s hard for me to imagine that it doesn’t. But even if it doesn’t affect rankings, Google likely uses it to influence and evaluate other changes to their algorithm. Either way, if the appearance of your site in Google’s SERP improves their metrics, that can only be fantastic for your organic search.

Kill The Search

Therefore, to optimize the search user experience, you must end the user quest and kill the search.

The user must have no reason to go back to Google and continue their quest for an answer. We will never know what that next click is. However, we can influence that next click by understanding our users.

To “kill the search,” we need to understand why users are landing on our page. What was the question or need that drove them to Google in the first place?

Consider a hotel site when a user searches for a specific hotel:

- Are they price shopping?

- Looking for reviews?

- In need of driving directions?

- Researching amenities?

Of course, we can make educated guesses. But we can do better.

Keyword Data

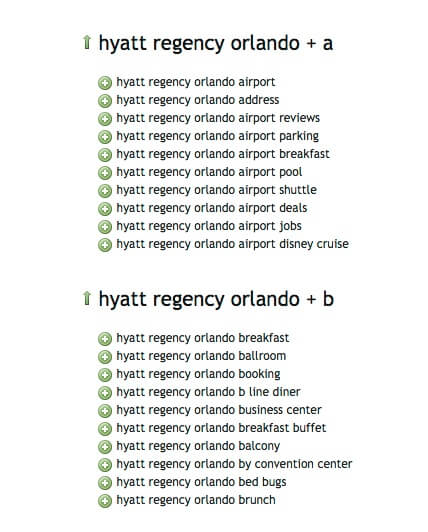

Keyword data is a good place to start. Examine the keyword data in Webmaster Tools (now rebranded as Search Console) and look for modifiers that reveal intent. Look at keywords for specific page types and for high-traffic individual pages.

Many keywords will be vague and not reveal intent. If you are a travel site, for example, you might see “Hyatt Regency” 100 times with no modifiers and only 20 times with modifiers (such as “reviews,” “directions” or “location”). The frequency of those modifiers can give you a good idea of the broad questions users have when they land on your site.
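
Counting modifiers is easy to automate once you have exported your queries. Here is a minimal Python sketch: the modifier list and the sample queries are made up for illustration, and in practice the queries would come from your own Search Console export.

```python
from collections import Counter

# Hypothetical intent modifiers; adapt the list to your own vertical.
MODIFIERS = ("reviews", "directions", "location", "price")


def count_modifiers(queries, modifiers=MODIFIERS):
    """Count queries per intent modifier; queries with none go under 'no modifier'."""
    counts = Counter()
    for query in queries:
        words = query.lower().split()
        found = [m for m in modifiers if m in words]
        if found:
            counts.update(found)
        else:
            counts["no modifier"] += 1
    return counts


# Made-up sample queries, standing in for a real keyword export.
sample = [
    "hyatt regency",
    "hyatt regency reviews",
    "hyatt regency directions",
    "Hyatt Regency reviews",
    "hyatt regency",
]

for label, n in count_modifiers(sample).most_common():
    print(label, n)
```

Matching on whole words keeps the sketch simple. It will miss variants such as “review” or “how to get to,” so treat the counts as a rough guide rather than a precise measurement.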

This is only a starting point. There might be many user queries about which you have no data, simply because you do not rank for those queries. That’s when you need to go to keyword tools like SEMrush or the Google Keyword Planner. I also like to use UberSuggest to get a good overview of what the user mindset is. (Although it does not have query volume, it catches many variations you don’t see in the other tools.)

Keyword data is a good start toward getting into our users’ heads, but it is only a start. Let’s take it further.

SEO Surveys

Surveys are fantastic tools to help you understand why people landed on your site. I’ve been doing SEO surveys for many years, using tools like SurveyMonkey and Qualaroo. Of course, surveys themselves are disruptive to the user experience, so I only keep them running long enough to reach statistical significance. I usually find 100 responses is sufficient. Things to keep in mind:

- You want to segment users based on search. This is an SEO survey, so it is only triggered for search visitors. (Of course, it’s useful to extend the survey to other segments, too.)

- The purpose of this survey is to understand why the user landed on your site. What was the question or problem that drove them to search?

- You need to trigger the survey very quickly. If you wait too long, you will have lost the opportunity to include the people who bounced very quickly. (Those are particularly the people you want to catch!) Generally, I launch after 10 or 15 seconds.

- The surveys should be segmented by page type. For example, people landing on a hotel property page on a travel site have very different motives from those of people landing on a city/hotel page. For high-traffic content pieces, you want to survey those pages individually.

- Your survey segments should represent a significant portion of your SEO traffic.
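
As a rough check on whether 100 responses is enough for your purposes, you can estimate the margin of error on any answer's share. The sketch below uses the standard normal approximation for a proportion at roughly 95% confidence; the function name is an assumption for illustration, and the approximation is weaker for very small shares.

```python
import math


def margin_of_error(share: float, responses: int, z: float = 1.96) -> float:
    """Approximate margin of error for a survey share.

    Uses the normal approximation; z = 1.96 corresponds to about 95% confidence.
    """
    return z * math.sqrt(share * (1.0 - share) / responses)


# Worst case is a 50/50 split: with 100 responses the margin is about 10 points.
print(round(100 * margin_of_error(0.5, 100), 1))  # 9.8
```

In other words, 100 responses is enough to tell a 40% answer from a 10% answer, but not enough to separate two answers that are only a few points apart.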

Ask the users, “Why did you visit this site today?” and list different options for the reasons. Make sure you list an “other” to capture reasons you might not have thought of. For instance, on a real estate home sales site, I have asked if users were looking for:

- A home to buy

- A home to rent

- Home prices

- School information

- A house estimate

- Open houses

- Maps

Based on your survey data, you can create a prioritized list of user needs. Often, you will find surprises which can also turn into opportunities. For example, suppose you survey users who land on your real estate site on a “home for sale” page, and you discover that 20% would also consider renting. That could be a great cross-marketing opportunity.

Statistical Significance

You want to satisfy your users, but you can’t please everyone. You will need to prioritize your improvements so that they meet the needs of as large a percentage of your visitors as possible.

For example, if 25% of visitors to a restaurant page want to make a reservation, and they can’t (either because the functionality isn’t there or due to usability problems), you have an issue. If only 1% want driving directions, that is a much smaller issue.
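
That prioritization can be sketched as a short sort. In this minimal Python example the data structure, the "satisfied" flag and the menu figure are hypothetical; only the 25% and 1% shares come from the restaurant example above.

```python
# Hypothetical survey results for a restaurant page: the share of visitors
# with each need, and whether the page currently satisfies that need.
needs = [
    {"need": "make a reservation", "share": 0.25, "satisfied": False},
    {"need": "driving directions", "share": 0.01, "satisfied": False},
    {"need": "see the menu", "share": 0.40, "satisfied": True},
]


def prioritize(needs):
    """Return the unmet needs, largest share of visitors first."""
    unmet = [n for n in needs if not n["satisfied"]]
    return sorted(unmet, key=lambda n: n["share"], reverse=True)


for item in prioritize(needs):
    print(f'{item["need"]}: {item["share"]:.0%} of visitors')
```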

10 Seconds Is All You Get

UX expert Jakob Nielsen analyzed a Microsoft Research study a couple of years ago and showed that the largest visitor drop-off comes in the first 10 seconds. If you get past 10 seconds, then users will give your site a chance. This means you have a maximum of 10 seconds to convince visitors that you:

- Have the answer to their question

- Have an answer that they can trust

- Will make it easy to get their answer

That’s a tall order, and your page design needs to balance many competing priorities. To design an effective landing page, you need to know what visitors’ questions are.

SEO Usability Testing

Usability testing is a great tool to help determine how successful users are at meeting all their goals. In 2015, it definitely should be considered part of an SEO’s role. If that task falls to the UX or product team, work with them to make sure your tests are covered. If not, then take the lead and feed the results back to those organizations.

For SEOs who don’t have experience with usability, I suggest Rocket Surgery Made Easy. Additionally, there are online services which provide valuable, lightweight and rapid test results. I’ve used both UserTesting.com (for more extensive tests) and FiveSecondtest.com for quick reactions from users. Here are some tips specific to SEO usability testing:

- Create a good SEO user scenario. Set the context and the objective. Start them on a search result page so you can observe the transition from search result page to your landing page.

- Focus on the landing page templates that get the most traffic.

- Focus on the dominant problems and use cases that you have identified through keyword analysis and surveys.

Consistent Titles & Meta Descriptions

If every search is a question, every result in the search results is a promise of an answer. Please make sure your titles are representative of what your site provides.

If you have reviews on 50% of your products, mention reviews only in the titles and meta descriptions of the products that actually have them. Otherwise, you will be getting bad clicks and unhappy users. Another example is the use of the word “free.” If you say “free,” you’d better have “free!”
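
If your titles come from a template, this rule is a one-line condition. Here is a minimal Python sketch, assuming a hypothetical `build_title` helper and a made-up title format and site name; adapt it to whatever your own template system provides.

```python
def build_title(product_name: str, review_count: int, site_name: str = "Example Store") -> str:
    """Mention reviews in the title only when the product actually has some."""
    if review_count > 0:
        return f"{product_name} Reviews & Prices | {site_name}"
    return f"{product_name} Prices | {site_name}"


print(build_title("Trail Running Shoe", 12))
print(build_title("Trail Running Shoe", 0))
```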

An Improved Web Product

The role of a successful SEO has broadened, and it demands that we understand and solve our visitors’ problems. It’s a huge challenge for SEOs, as we need to broaden our skill set and inject ourselves into different organizations. However, this is much more interesting and rewarding compared to link building.

Ultimately, the role of SEO will become even more critical within an organization, as the learnings and improvements will broadly benefit a website beyond just improving SEO. The great thing about the transition from Search Engine Optimization to Search Experience Optimization is that the end result is not just more traffic, it’s a better Web product.
